Using A/B testing in marketing means running controlled experiments on your campaigns and letting the results, rather than intuition, decide which version you keep. This guide covers how the method works, how to set up and analyze a test, and which mistakes to avoid.

A/B testing is more than a buzzword: it gives marketers a data-driven way to raise conversion rates and to lower the risk of rolling out changes that hurt performance.

Introduction to A/B Testing in Marketing

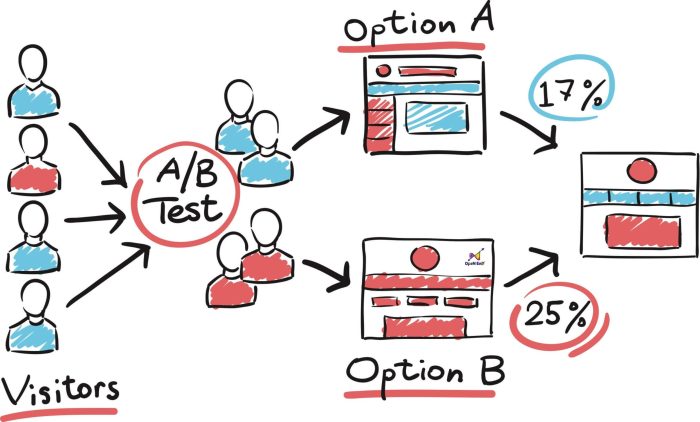

A/B testing, also known as split testing, is a method used in marketing to compare two versions of a webpage, email, or ad to determine which one performs better. Each version is shown to a different, randomly assigned segment of the audience over the same period, and the results are analyzed to see which version drives more conversions or engagement.
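
One common way to split an audience is to hash a stable user identifier, so the same person always sees the same version. The sketch below is a minimal illustration of that idea, not a prescribed implementation; the function name `assign_variant`, the experiment label, and the 50/50 split are all assumptions made for the example.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "homepage_headline") -> str:
    """Deterministically assign a user to variant 'A' or 'B' (assumed 50/50 split)."""
    # Hash the (hypothetical) experiment name together with the user ID so the
    # same user always lands in the same group within one experiment.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 100  # bucket number in the range 0..99
    return "A" if bucket < 50 else "B"


if __name__ == "__main__":
    for uid in ("user-1", "user-2", "user-3"):
        print(uid, assign_variant(uid))
```

Including the experiment name in the hash means a user who lands in group A for one test is not automatically in group A for every other test.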

How A/B Testing is Used in Marketing Campaigns

A/B testing allows marketers to make data-driven decisions by testing different variations of their campaigns to see which one resonates best with their target audience. By testing elements like headlines, call-to-action buttons, images, and even colors, marketers can optimize their campaigns for better performance.

- Testing different subject lines in email campaigns to see which one has a higher open rate

- Comparing two versions of a landing page to see which one generates more leads

- Testing different ad copies to see which one has a higher click-through rate

By using A/B testing, marketers can improve the effectiveness of their campaigns and ultimately drive better results.

Benefits of Using A/B Testing in Marketing

A/B testing in marketing offers several advantages that can significantly impact the success of marketing strategies. By testing different variations of content, design, or features, businesses can gain valuable insights that help in making informed decisions to enhance performance and achieve better results.

Optimizing Conversion Rates

- A/B testing allows marketers to experiment with different elements of a campaign, such as headlines, calls-to-action, or images, to determine which combination yields the highest conversion rates.

- By analyzing the results of A/B tests, marketers can identify the most effective strategies for engaging with their target audience and driving them towards desired actions, ultimately leading to higher conversion rates.

- Optimizing conversion rates through A/B testing can help businesses increase their return on investment (ROI) and maximize the impact of their marketing efforts.

Reducing Risks in Marketing Decisions

- One of the key benefits of A/B testing is that it enables businesses to make decisions based on observed audience behavior, rather than relying on assumptions or guesswork.

- By testing different variations on a smaller scale before implementing changes on a larger scale, companies can minimize the risks associated with making major marketing decisions that could potentially harm their brand reputation or sales.

- A/B testing provides a safety net for marketers by allowing them to validate their ideas and strategies through empirical evidence, ensuring that their efforts are aligned with the preferences and behavior of their target audience.

Setting Up A/B Tests

When setting up an A/B test, there are several key steps to follow in order to ensure accurate results and meaningful insights. It’s important to define clear objectives for the test and carefully select variables to experiment with.

Defining Clear Objectives for A/B Testing

To start off, it’s crucial to clearly outline what you hope to achieve through the A/B test. Whether it’s increasing click-through rates, improving conversion rates, or enhancing user engagement, setting specific and measurable goals will guide the entire testing process.

- Identify the key metrics you want to measure and improve.

- Establish a baseline performance to compare test results against.

- Determine the target audience for the test and what actions you want them to take.

- Ensure that the objectives align with your overall marketing strategy and business goals.

Selecting Variables to Test in A/B Experiments

When choosing what variables to test in your A/B experiments, it’s important to focus on elements that are likely to have a significant impact on the desired outcomes. Here are some tips for selecting variables to test:

- Start with high-impact areas such as headlines, call-to-action buttons, or visuals.

- Test one variable at a time so that any change in results can be attributed to a specific element.

- Consider the potential impact of each variable on the user experience and conversion rates.

- Ensure that the variables you choose are relevant to the objectives of the test.

Analyzing A/B Test Results

When it comes to analyzing A/B test results in marketing, it is crucial to carefully examine the data to draw meaningful insights that can inform future strategies. By understanding the process of analyzing A/B test data, interpreting statistical significance, and following best practices, marketers can make informed decisions to optimize their campaigns and drive better results.

Process of Analyzing A/B Test Data

- Collect all relevant data points from the A/B test, including conversion rates, click-through rates, bounce rates, and any other metrics that were measured.

- Organize the data in a clear and structured manner, such as creating tables or graphs to visualize the results effectively.

- Analyze the data to identify trends, patterns, and differences between the control group (A) and the variant group (B).

- Calculate key performance indicators (KPIs) to compare the success of each variation and determine which one performed better.

- Draw conclusions based on the data analysis and use these insights to optimize future marketing campaigns.
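
The KPI comparison step can be sketched in a few lines of Python. This is a minimal example under stated assumptions: the helper names `conversion_rate` and `relative_lift` are hypothetical, and the visitor and conversion counts are made-up numbers used only for illustration.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the desired action."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(rate_a: float, rate_b: float) -> float:
    """Relative change of variant B over control A (0.25 means +25%)."""
    if rate_a == 0:
        raise ValueError("control rate is zero, so relative lift is undefined")
    return (rate_b - rate_a) / rate_a


if __name__ == "__main__":
    # Illustrative (made-up) counts for control A and variant B.
    rate_a = conversion_rate(conversions=200, visitors=5000)
    rate_b = conversion_rate(conversions=250, visitors=5000)
    print(f"A: {rate_a:.2%}  B: {rate_b:.2%}  lift: {relative_lift(rate_a, rate_b):+.1%}")
```

A lift figure on its own does not say whether the difference is reliable; that depends on the sample size and on a significance test.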

Statistical Significance in A/B Testing Results

- Statistical significance plays a crucial role in A/B testing results as it helps determine whether the differences observed between the control and variant groups reflect a real effect or could plausibly be due to chance.

- It is important to set a confidence level (usually 95% or higher, which corresponds to a significance threshold of 0.05 or lower) before the test starts, and to judge the results against it.

- A statistically significant result makes it unlikely, though not impossible, that the findings are the product of random fluctuations in the data.

- When analyzing A/B test results, always consider the statistical significance to make informed decisions based on concrete evidence.
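
For conversion-style metrics, one standard way to check significance is a two-proportion z-test. The sketch below uses only the Python standard library; the function name `two_proportion_z_test` and the example counts are assumptions for illustration, and the pooled normal approximation it relies on is only reasonable when each group has a fairly large number of visitors and conversions.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("pooled rate is 0 or 1, so the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Illustrative (made-up) counts: 200/5000 for A versus 250/5000 for B.
    z, p = two_proportion_z_test(200, 5000, 250, 5000)
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"z = {z:.2f}, p = {p:.4f} -> {verdict} at the 95% confidence level")
```

With these example counts the p-value falls below 0.05, so the difference would count as significant at the 95% confidence level mentioned above.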

Best Practices for Interpreting and Acting on A/B Test Findings

- Focus on the primary goal of the A/B test and prioritize the metrics that directly impact this goal.

- Avoid making decisions based on isolated results and consider the overall performance of each variation.

- Iterate and test continuously by implementing the learnings from A/B tests to refine future campaigns and strategies.

- Document the results and insights gained from A/B testing to build a knowledge base for future reference and decision-making.

Common Mistakes to Avoid in A/B Testing

When it comes to A/B testing in marketing, there are some common mistakes that marketers should steer clear of to ensure accurate results and meaningful insights. Let’s dive into some of these pitfalls and why it’s crucial to test one variable at a time.

Avoid Testing Multiple Variables Simultaneously

- One of the biggest mistakes in A/B testing is changing multiple variables at once. This can lead to confusion about which element actually impacted the results.

- For example, if you change both the headline and the call-to-action button on a landing page, and see an increase in conversions, you won’t know which change was responsible for the improvement.

- It’s essential to isolate variables to accurately measure their impact on the outcomes.

Ignoring Sample Size and Statistical Significance

- Another common mistake is not considering the sample size and statistical significance when interpreting A/B test results.

- Small sample sizes can lead to unreliable results, while failing to account for statistical significance can result in drawing conclusions based on random chance.

- It’s crucial to ensure that your test has a large enough sample size to produce meaningful results and to verify that any differences observed are statistically significant.
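
A rough sample size can be estimated before the test starts. The following sketch applies the usual normal-approximation formula for comparing two proportions. The function name `sample_size_per_variant`, the 80% power default, and the example rates are assumptions for illustration; the 0.05 significance level matches the 95% confidence level discussed earlier.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in EACH group for a two-sided test."""
    if not (0 < baseline_rate < 1 and 0 < expected_rate < 1):
        raise ValueError("rates must be strictly between 0 and 1")
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ to have an effect to detect")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    p_bar = (baseline_rate + expected_rate) / 2
    spread_null = math.sqrt(2 * p_bar * (1 - p_bar))
    spread_alt = math.sqrt(baseline_rate * (1 - baseline_rate)
                           + expected_rate * (1 - expected_rate))
    numerator = (z_alpha * spread_null + z_beta * spread_alt) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


if __name__ == "__main__":
    # Illustrative (made-up) goal: detect an improvement from 4% to 5%.
    print(sample_size_per_variant(0.04, 0.05), "visitors per variant")
```

Detecting a one-point improvement on a 4% baseline already calls for several thousand visitors per variant under these assumptions, and smaller expected improvements need considerably more.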

Overlooking Seasonality and External Factors

- Marketers often make the mistake of overlooking external factors such as seasonality, holidays, or marketing campaigns running concurrently with the A/B test.

- These external influences can skew the results and lead to incorrect conclusions about the effectiveness of the changes being tested.

- It’s important to consider external factors and control for them as much as possible to get accurate insights from A/B testing.